A cache keeps frequently used data close to where it is needed. Take a user's billing records as an example: when a user logs in, their credentials are used to fetch their bill history, and we may need this set of data in multiple places.

We can save that data in a cache and avoid a round trip to the database each time we need it. This works until the data changes; at that point the cached copy must be updated or removed.

Why do we need a cache?

- To save network calls

- To avoid recomputation

- Ultimately, caches are designed to speed up responses to client requests.

There are two questions we should answer when designing a distributed cache system:

- When do we remove data from the cache?

- When do we add data to the cache (load data into the cache)?

The answers to both largely depend on your cache policy.

LRU - Least recently used: whenever data is added to the cache or read from it, it moves to the top of the cache. When the cache is full and you need to remove data, remove it from the bottom of the cache, which holds the entry that has gone unused the longest.
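A minimal LRU sketch, assuming a fixed capacity and using Python's `collections.OrderedDict`: the end of the ordered dict plays the role of the "top" (most recently used) and the front plays the "bottom" (least recently used). The class name `LRUCache` is illustrative.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity LRU cache.

    The most recently used key sits at the end of the OrderedDict ("top"),
    the least recently used key at the front ("bottom").
    """

    def __init__(self, capacity):
        self.capacity = capacity
        self.data = OrderedDict()

    def get(self, key):
        if key not in self.data:
            return None
        self.data.move_to_end(key)  # reading a key makes it most recently used
        return self.data[key]

    def put(self, key, value):
        if key in self.data:
            self.data.move_to_end(key)  # updating also counts as a use
        self.data[key] = value
        if len(self.data) > self.capacity:
            self.data.popitem(last=False)  # evict from the bottom (the LRU entry)
```

For example, with capacity 2: put `a`, put `b`, read `a`, then put `c`. Because `a` was used more recently than `b`, `b` is the one evicted.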

LFU - Least frequently used: when you want to add new data and the cache is full, remove the least frequently used entry to make room for the incoming data.
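A minimal LFU sketch, assuming a fixed capacity of at least 1 and a simple access counter per key. It finds the eviction victim with a linear scan, and ties are broken by insertion order (oldest first); both choices are simplifications for illustration, and production LFU caches typically use more efficient structures and their own tie-breaking rules.

```python
from collections import Counter


class LFUCache:
    """Minimal LFU cache: on overflow, evict the key with the fewest accesses.

    Assumes capacity >= 1. Ties go to the key inserted first, because min()
    returns the first minimal key in dict insertion order.
    """

    def __init__(self, capacity):
        self.capacity = capacity
        self.data = {}
        self.hits = Counter()  # key -> number of puts/gets so far

    def get(self, key):
        if key not in self.data:
            return None
        self.hits[key] += 1
        return self.data[key]

    def put(self, key, value):
        if key not in self.data and len(self.data) >= self.capacity:
            # O(n) scan for the least frequently used key.
            victim = min(self.data, key=lambda k: self.hits[k])
            del self.data[victim]
            del self.hits[victim]
        self.data[key] = value
        self.hits[key] += 1
```

With capacity 2: put `a`, put `b`, read `a`, then put `c`. Key `a` has two accesses and `b` only one, so `b` is evicted.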

How close should we put the cache: close to the server or close to the database?

If you have a small cache, it is best to keep it close to the server, because the main benefit of a cache close to the server is faster responses. But what happens when the server crashes? Its local cache is lost along with it.

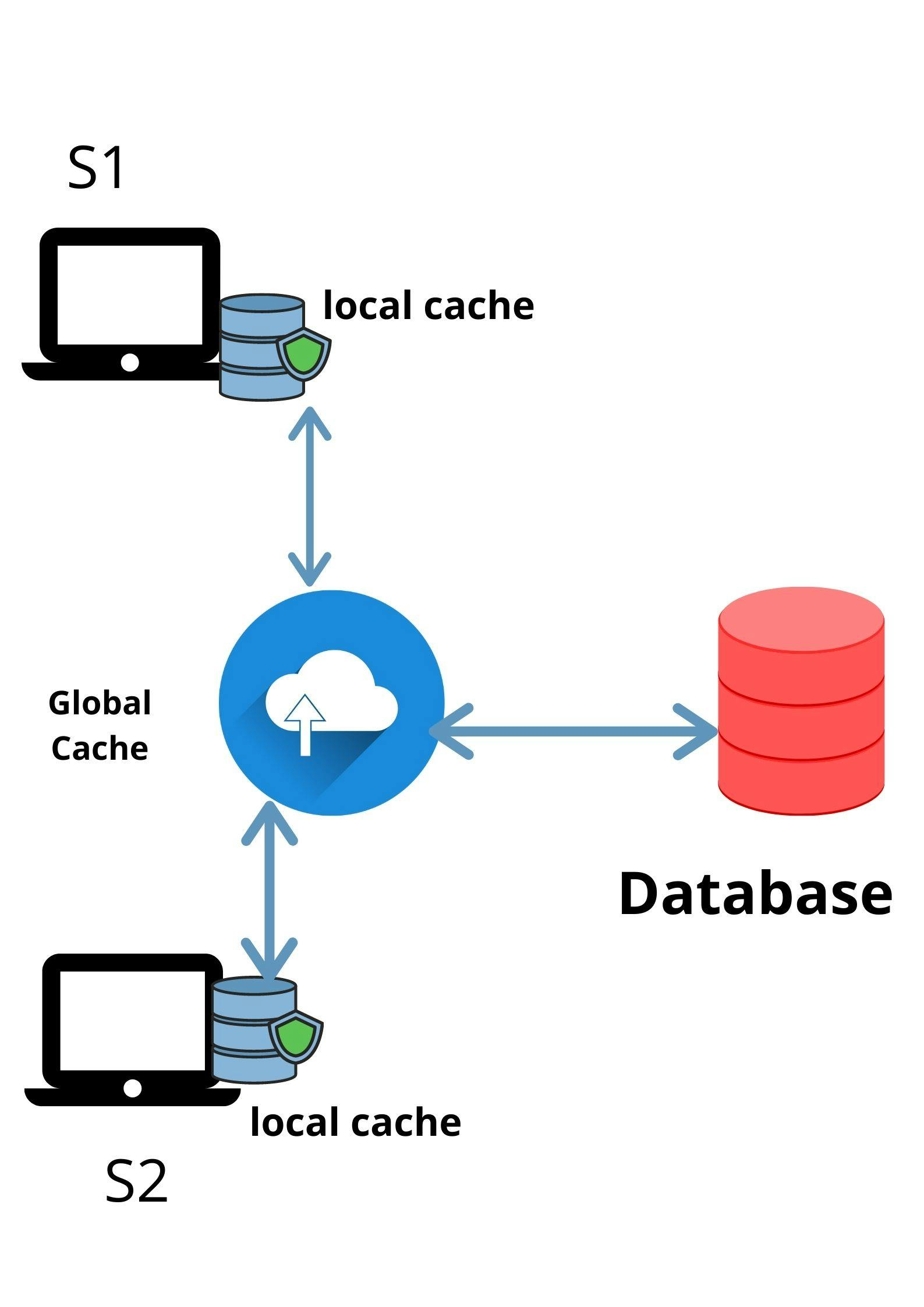

If we have a distributed system, it is best to deploy a global cache (for example, Redis) that sits between the servers and the database, while each server also keeps a small local cache. If there is a miss in the global cache, we fetch the data from the database.

So whenever one of our servers goes down, its cached data is still available from the global cache. The benefit of a global cache is that it avoids queries on the database, at the cost of more queries on the global cache.
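The local-plus-global layout can be sketched as a three-step lookup: local cache, then global cache, then database. This is an illustration only: plain dicts stand in for the shared global cache (Redis in the text) and for the database, `TwoTierCache` is a hypothetical name, and the local cache is left unbounded for brevity (a real one would have an eviction policy).

```python
class TwoTierCache:
    """Per-server local cache in front of a shared global cache and a database.

    `global_cache` and `db` are plain dicts standing in for a shared cache
    service and a database; both stand-ins are assumptions for illustration.
    """

    def __init__(self, global_cache, db):
        self.local = {}                   # per-server; lost if this server crashes
        self.global_cache = global_cache  # shared by every server
        self.db = db
        self.db_reads = 0                 # database queries made by this server

    def get(self, key):
        if key in self.local:             # 1. in-process memory: fastest
            return self.local[key]
        if key in self.global_cache:      # 2. shared cache: one network hop
            value = self.global_cache[key]
        else:                             # 3. miss everywhere: query the database
            self.db_reads += 1
            value = self.db[key]
            self.global_cache[key] = value
        self.local[key] = value
        return value
```

If one server loads a user's bills, a second server (or the first one after a restart, with an empty local cache) is served from the global cache without querying the database again.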